Our analysis of how Facebook implements its hate-speech rules shows that its content reviewers often make different calls on whether to allow or delete items with similar content. To highlight this inconsistency, three pairs of posts on the same themes are shown below, along with Facebook’s decisions in each case. They are followed by 43 other posts that we brought to Facebook’s attention, and the actions that the company took. Facebook acknowledged it made a mistake in 22 instances and defended 19 of its rulings.

Facebook’s original decision: Facebook left this one up.

Facebook’s response: Facebook said its rules allow attacking an ideology but not members of the religion.

Facebook’s original decision: Facebook initially left this one up. But after being contacted by ProPublica, the company said it was a mistake and took it down.

Facebook’s response: Facebook said the cartoon attacks members of a religion, rather than the religion itself, which violates its hate speech guidelines.

Facebook’s original decision: Facebook left this one up.

Facebook’s response: Facebook said this does not contain an attack on a protected category of people.

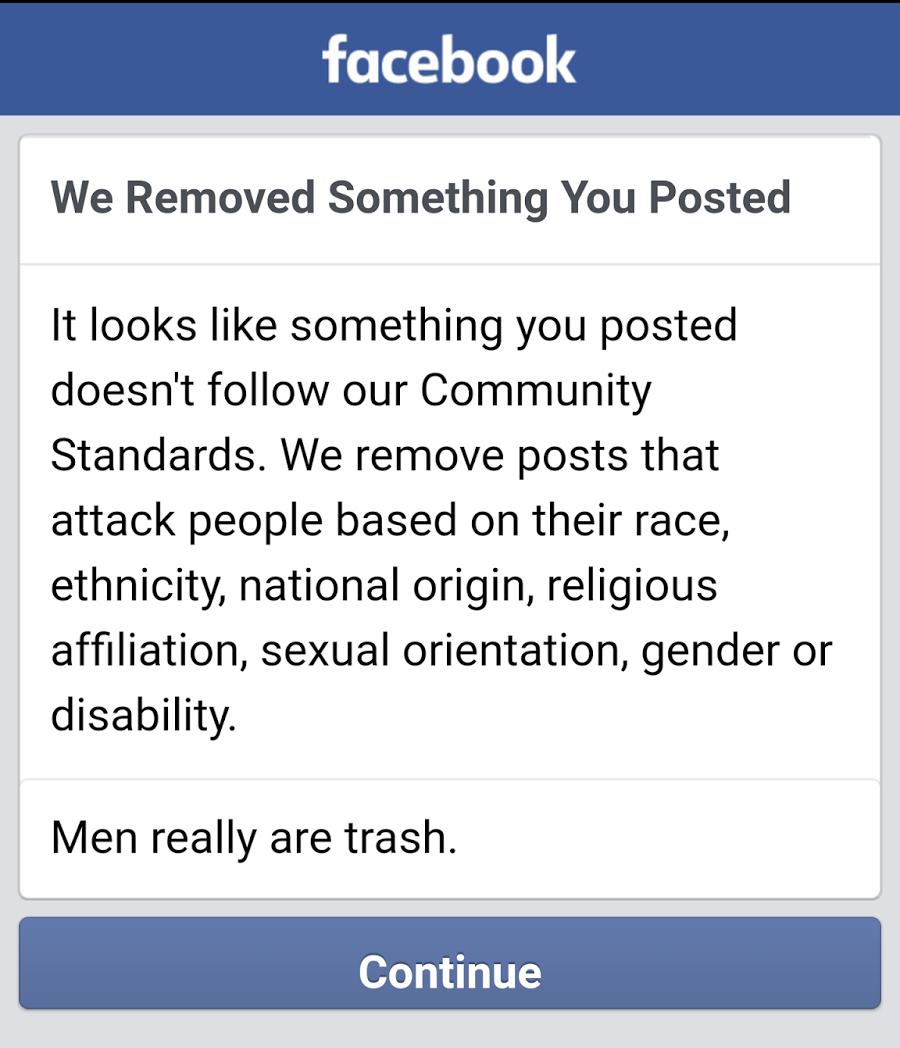

Facebook’s original decision: Facebook took this one down.

Facebook’s response: Facebook said it didn’t have enough information to comment on this decision.

Facebook’s original decision: Facebook initially left this one up. But after being contacted by ProPublica, the company said it was a mistake and took it down.

Facebook’s response: Facebook said the photo was in an album with many pictures, some of which violated its policies against bullying and hate speech.

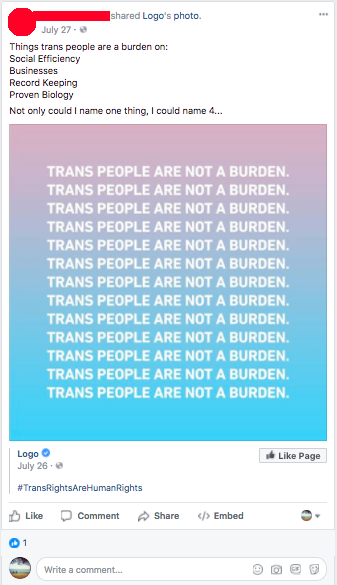

Facebook’s original decision: Facebook took this one down.

Facebook’s response: Facebook said this post violates its hate speech policies because it is a gender-based attack.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. Attacking the members of a religion is not acceptable, but attacking the religion itself is.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. Attacking the members of a religion is not acceptable, but attacking the religion itself is.

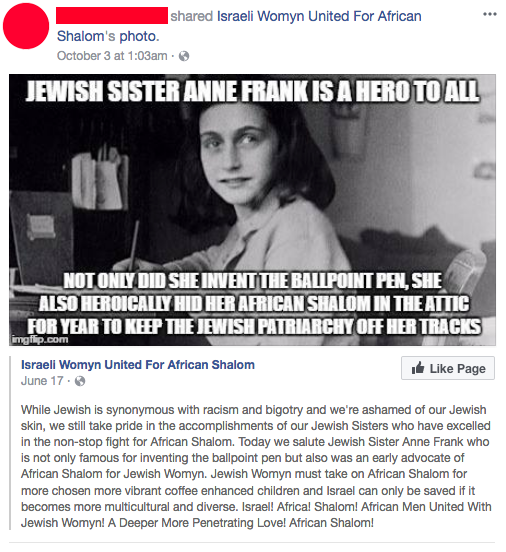

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies because there is no attack and no protected characteristic.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

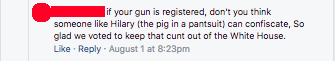

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. Standard hate speech protections do not apply to public figures.

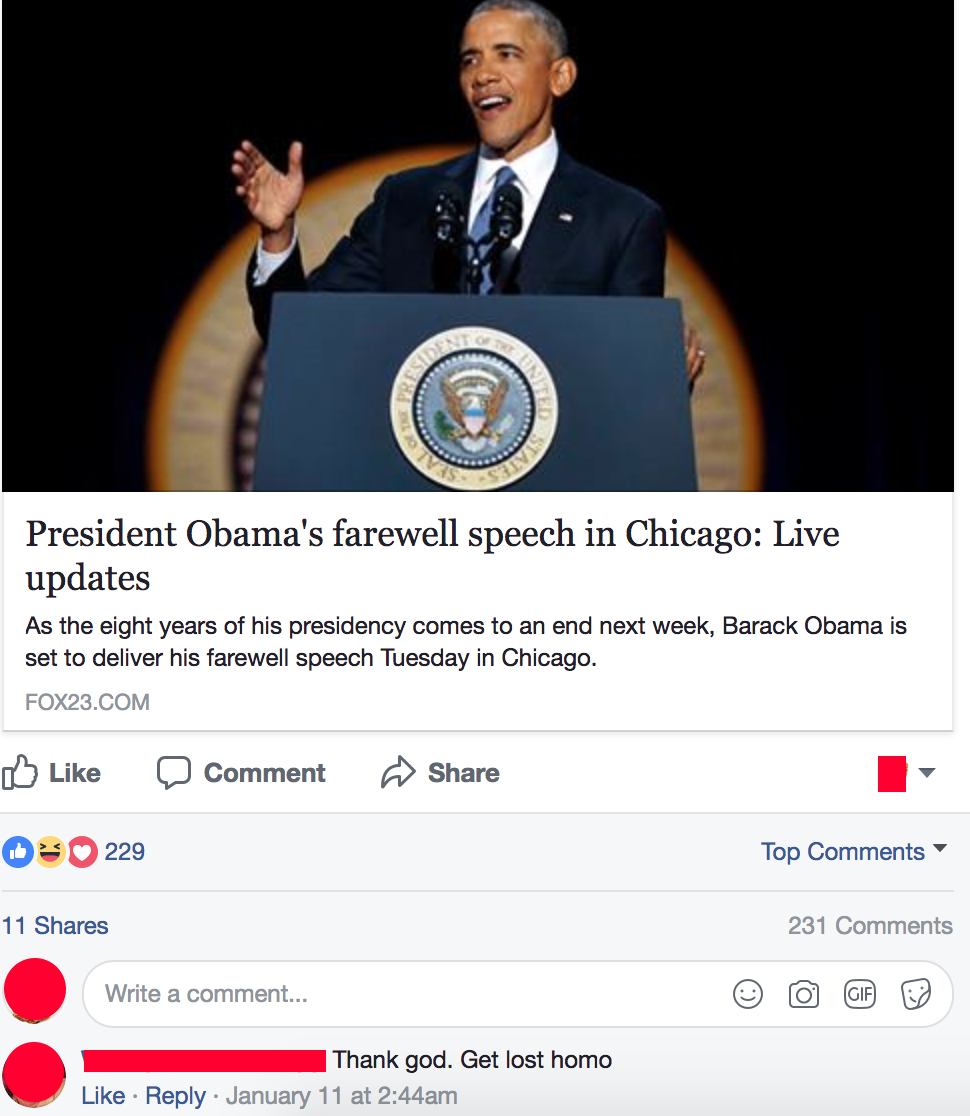

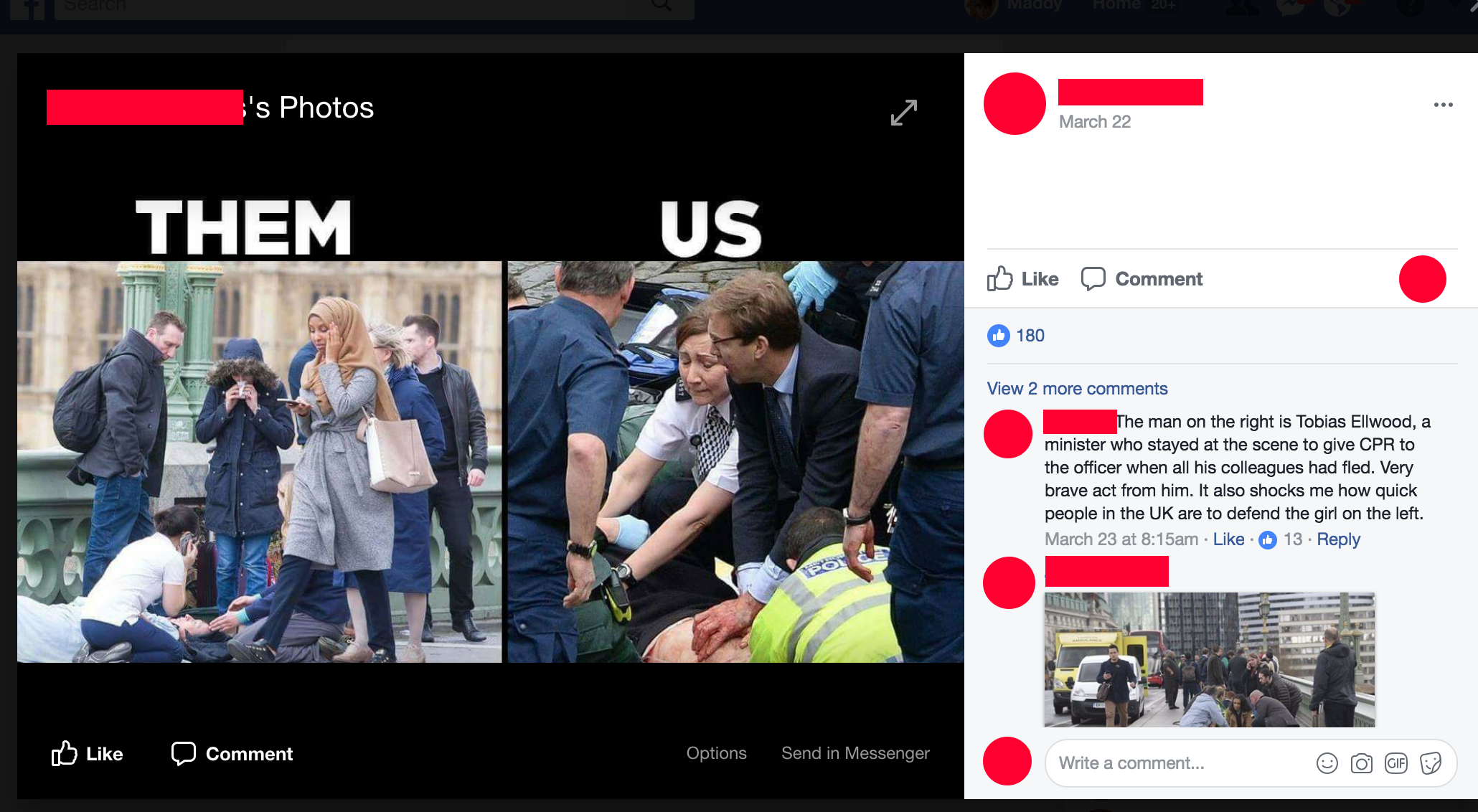

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the comment up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

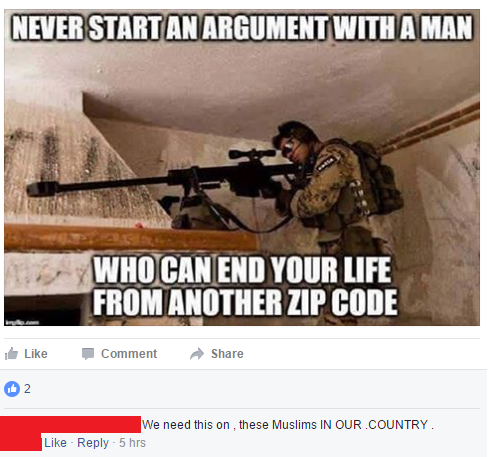

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

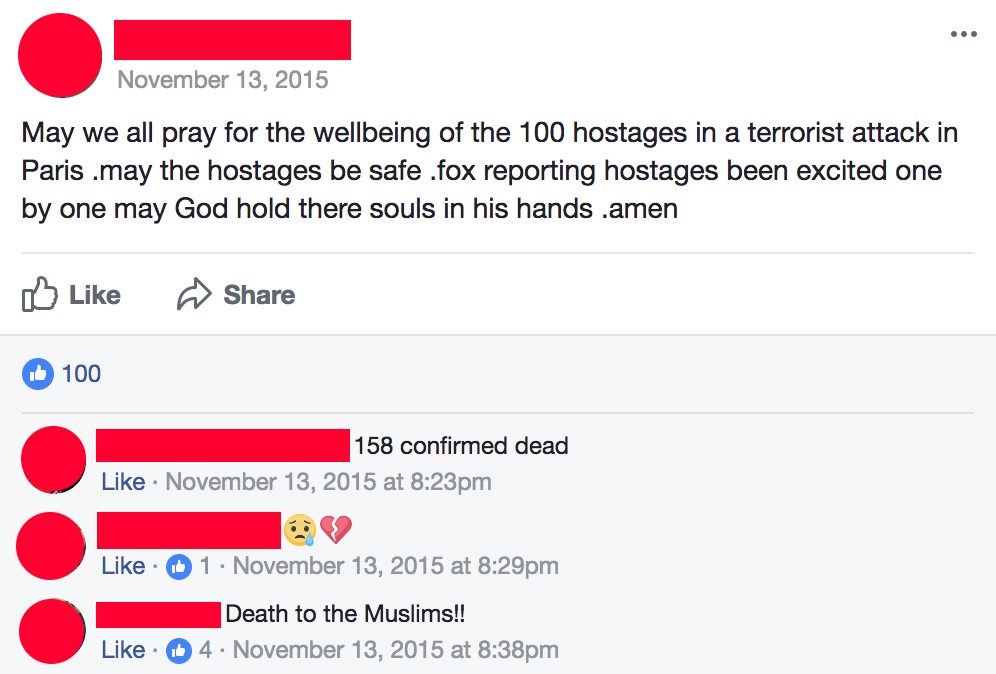

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the comment up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies against hate speech, but would require a warning for graphic content. There is no explicit attack against a protected group.

Facebook’s original decision: Took it down.

Facebook’s response: This violates Facebook’s hate speech policies.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. There is no explicit attack on a protected group.

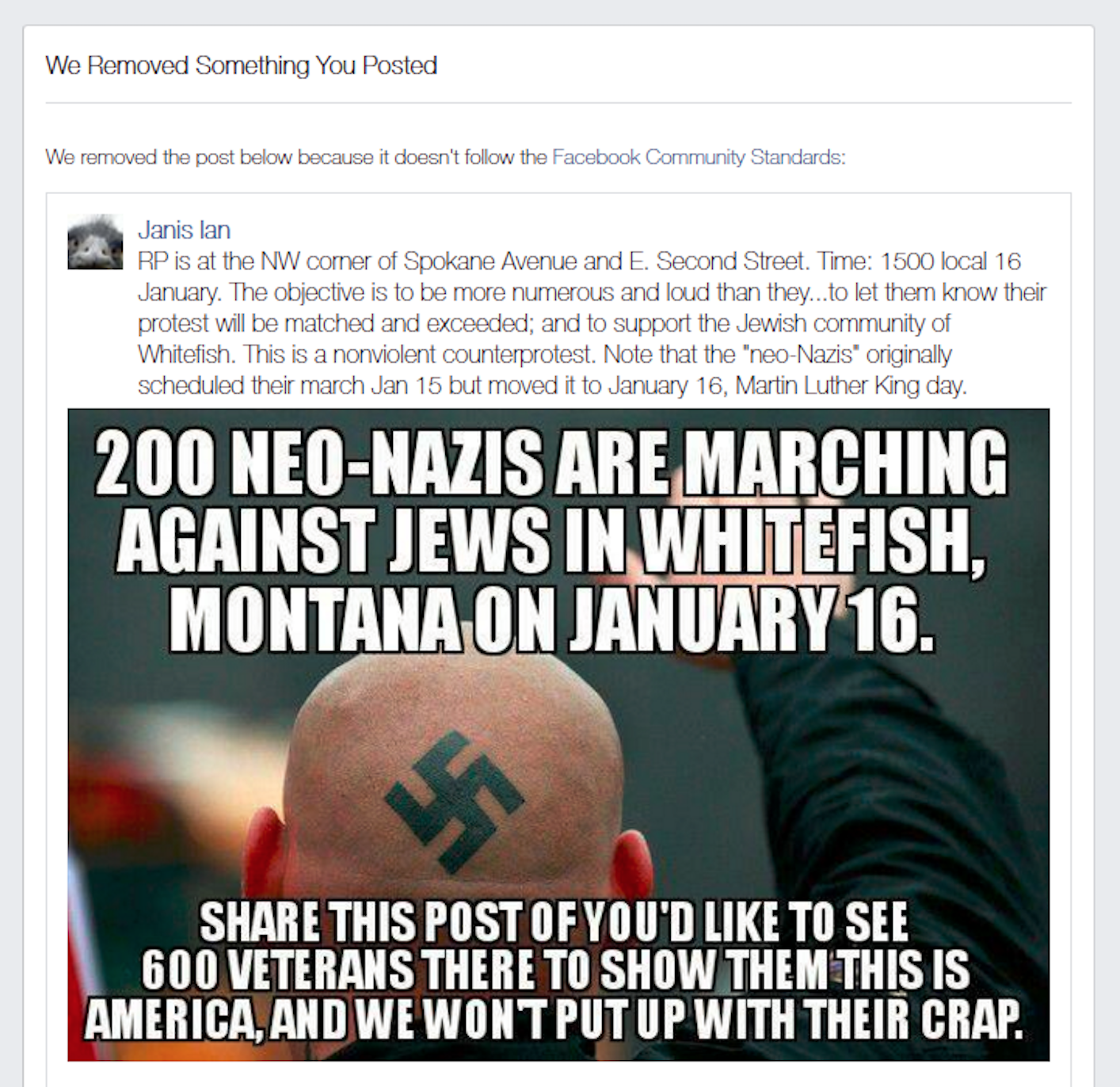

Facebook’s original decision: Took it down.

Facebook’s response: This does not violate Facebook policies. The company categorized its original decision – to remove the post – as a mistake.

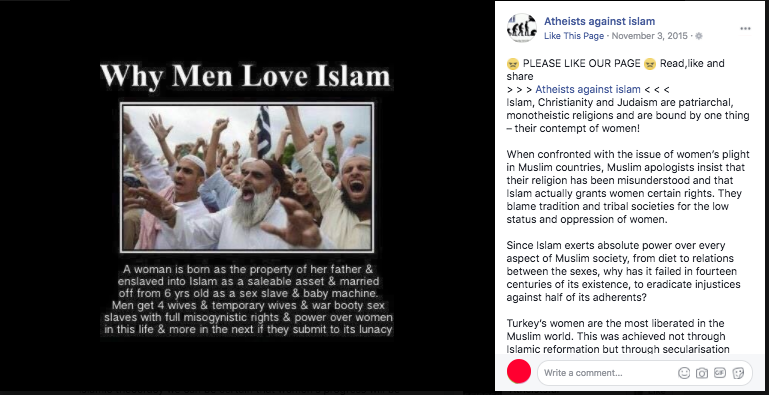

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the page up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies against hate speech, because it is not an attack on the members of a religion. There is no explicit attack against a protected group.

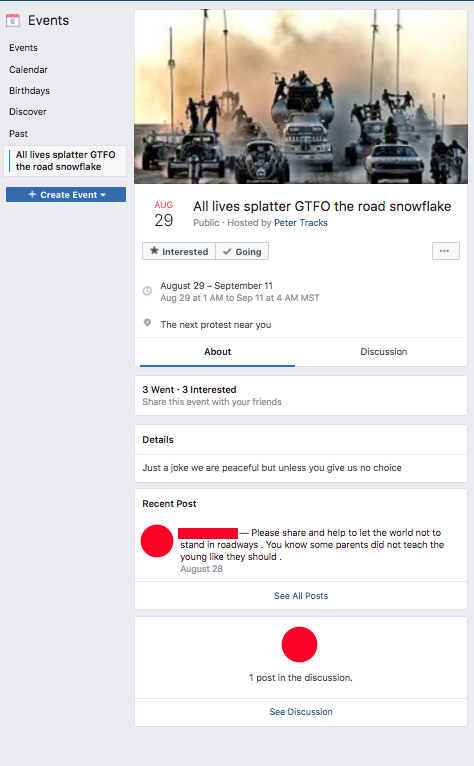

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the event up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies against mocking victims of violence or hate crimes. It was not initially taken down because the entire page was reported, rather than the post itself.

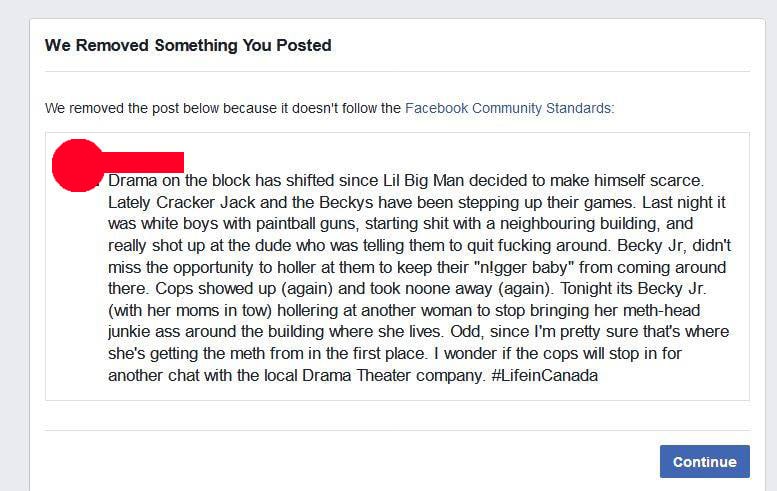

Facebook’s original decision: Took it down.

Facebook’s response: This violates Facebook policies, and it was correctly removed. The poster is negatively targeting people with the slur “cracker.”

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Left it up.

Facebook’s response: This was either not reported or deleted by its author. Citing user privacy, Facebook would not disclose when a user deletes his or her own content, so it combined those two groups.

Facebook’s original decision: Left it up.

Facebook’s response: This was either not reported or deleted by its author. Citing user privacy, Facebook would not disclose when a user deletes his or her own content, so it combined those two groups.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. Hate speech protections do not apply to public figures.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. The company says Black Lives Matter activist Chanelle Helm is a public figure, and thus standard hate speech protections do not apply to her.

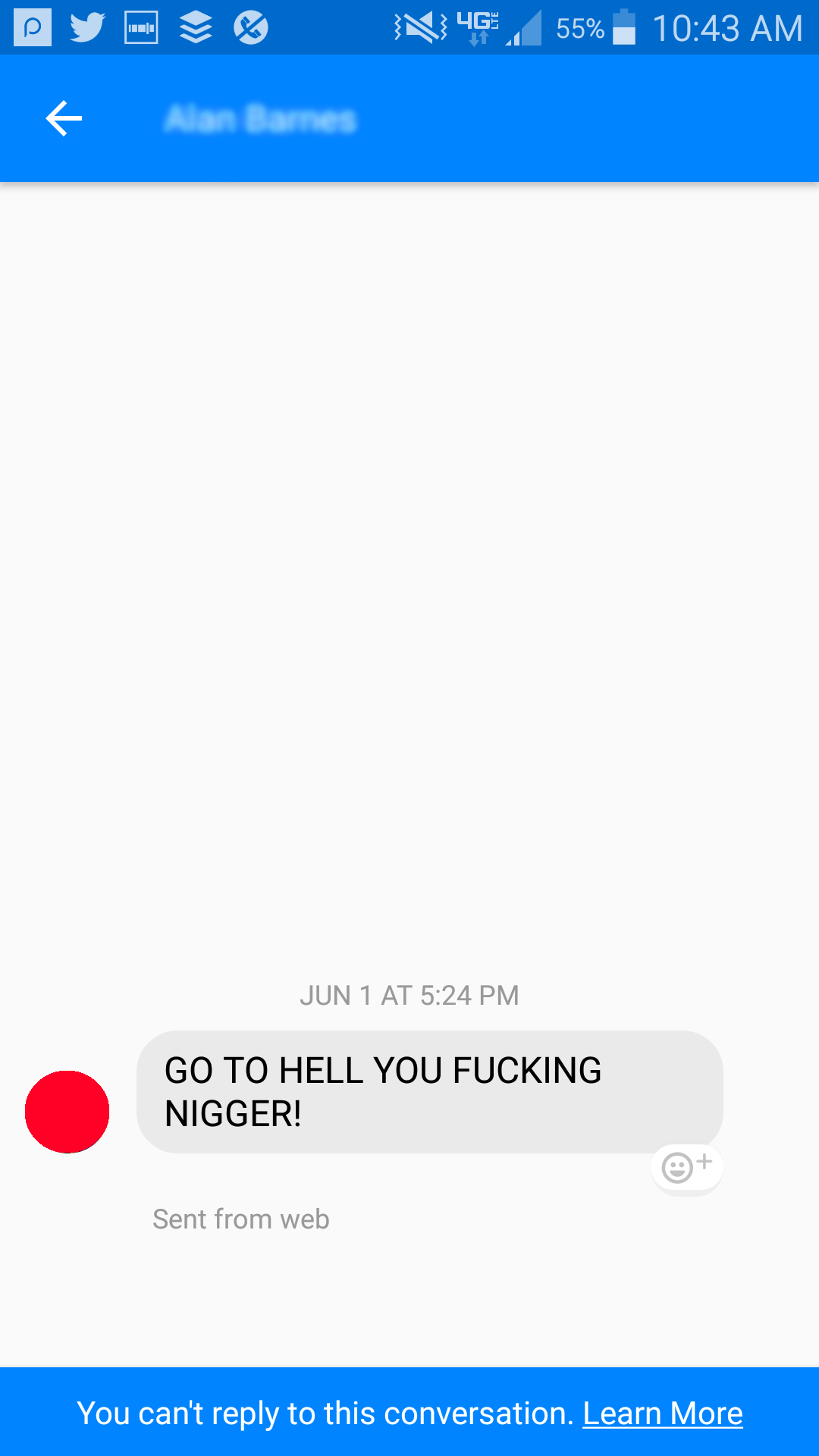

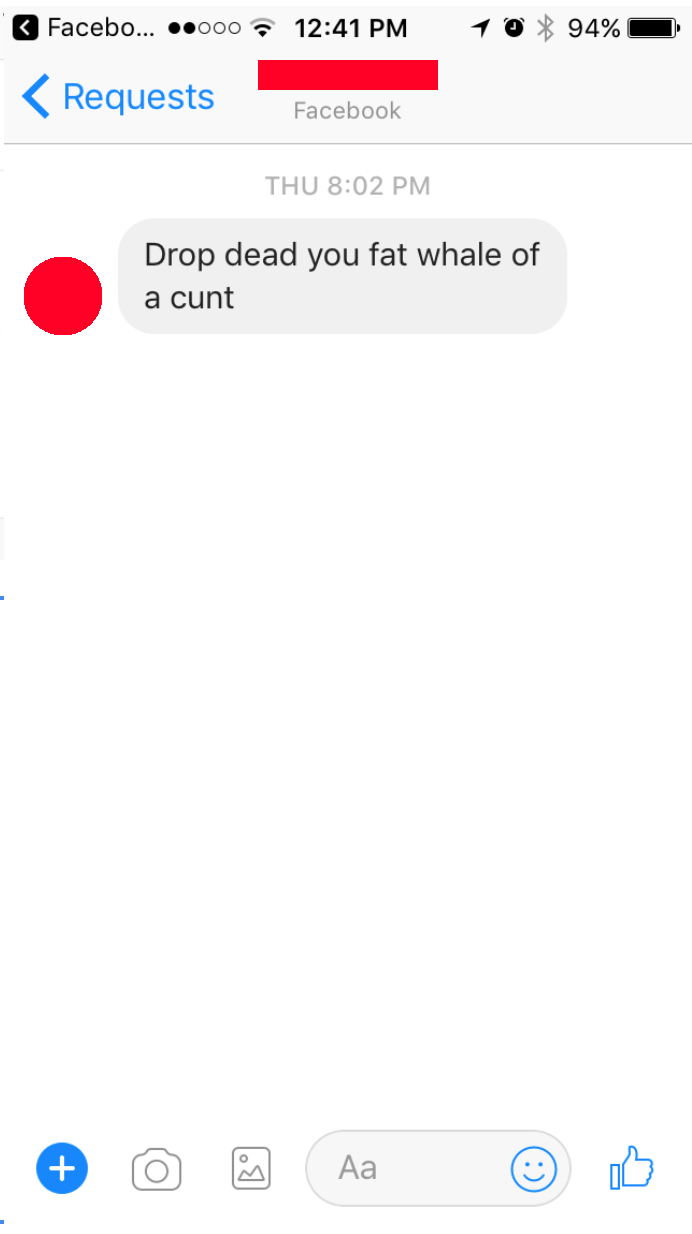

Facebook’s original decision: No action.

Facebook’s response: This does violate Facebook policies. The user did not report this message, but blocked the responsible account. Facebook is working to improve reporting and ignoring capabilities in Messenger.

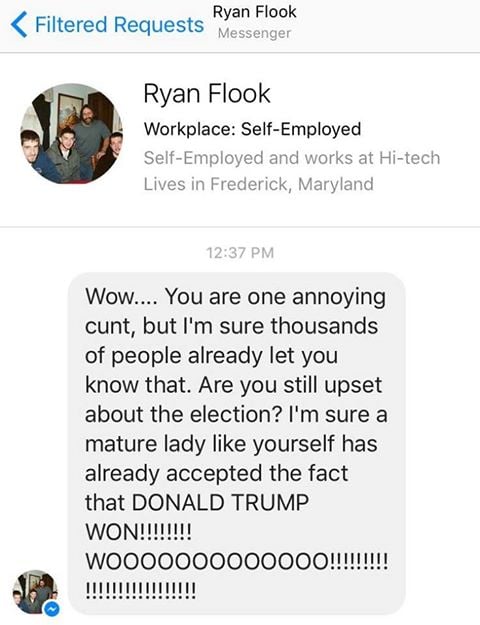

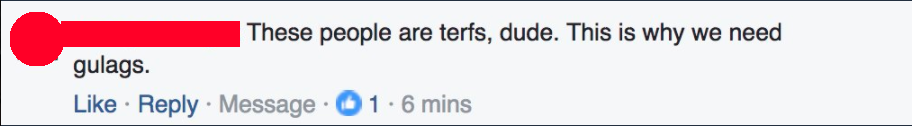

Facebook’s original decision: No action.

Facebook’s response: This does violate Facebook policies. The user did not report this message, but blocked the responsible account. Facebook said it is working to make it easier for users to report hate speech from within Messenger chats.

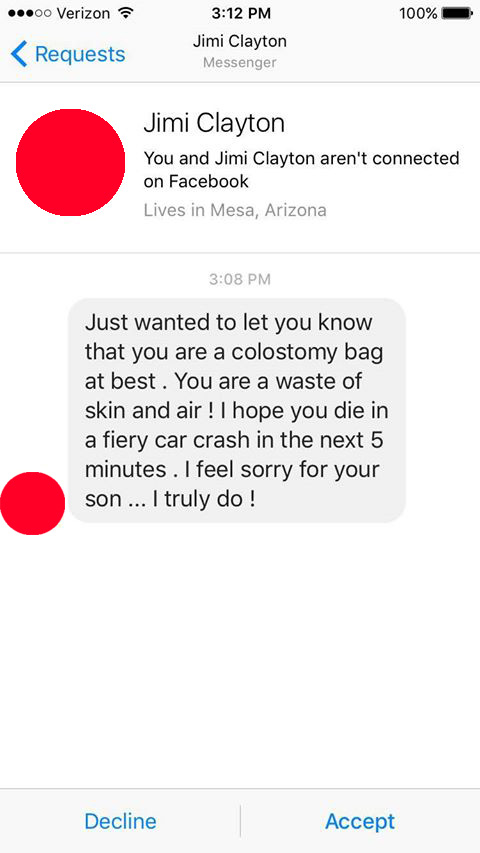

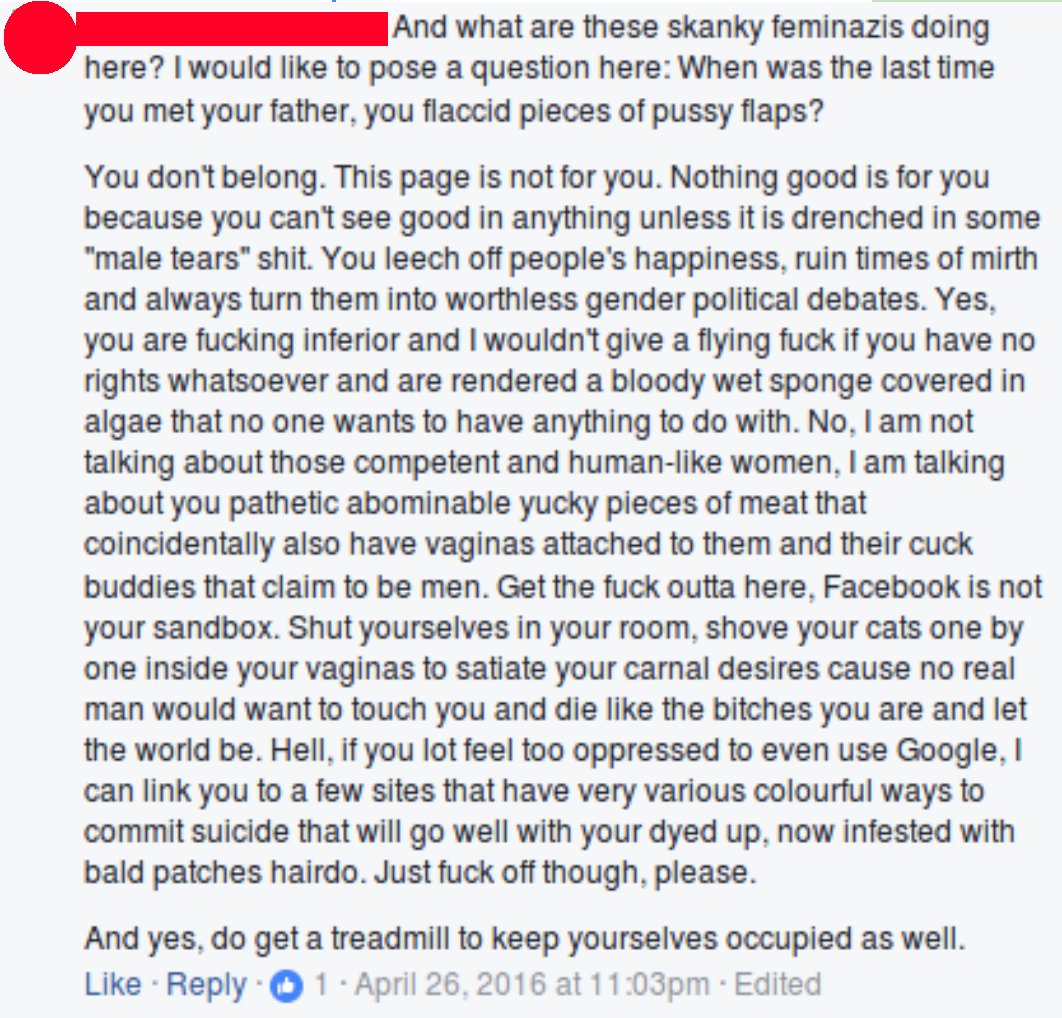

Facebook’s original decision: No action.

Facebook’s response: This does violate Facebook policies. The user did not report this message, but blocked the responsible account. Facebook said it is working to make it easier for users to report hate speech from within Messenger chats.

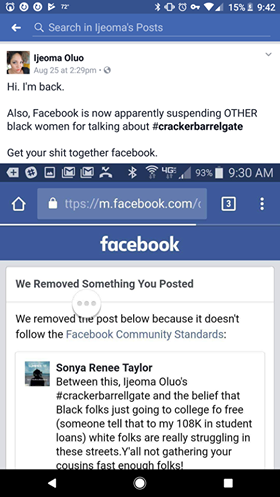

Facebook’s original decision: No action.

Facebook’s response: This does violate Facebook policies. The user did not report this message, but blocked the responsible account. Facebook said it is working to make it easier for users to report hate speech from within Messenger chats.

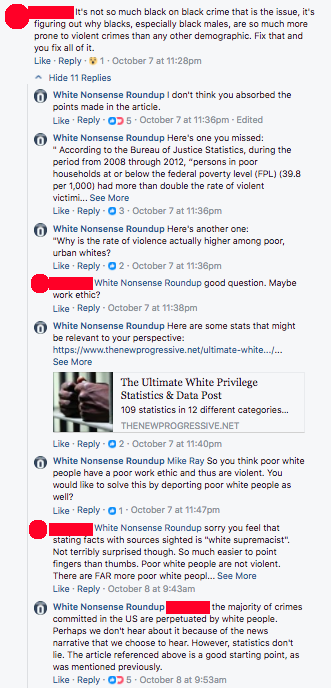

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. It does not explicitly attack a protected category of people.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the comment up – as a mistake. It was removed after ProPublica asked for an explanation.

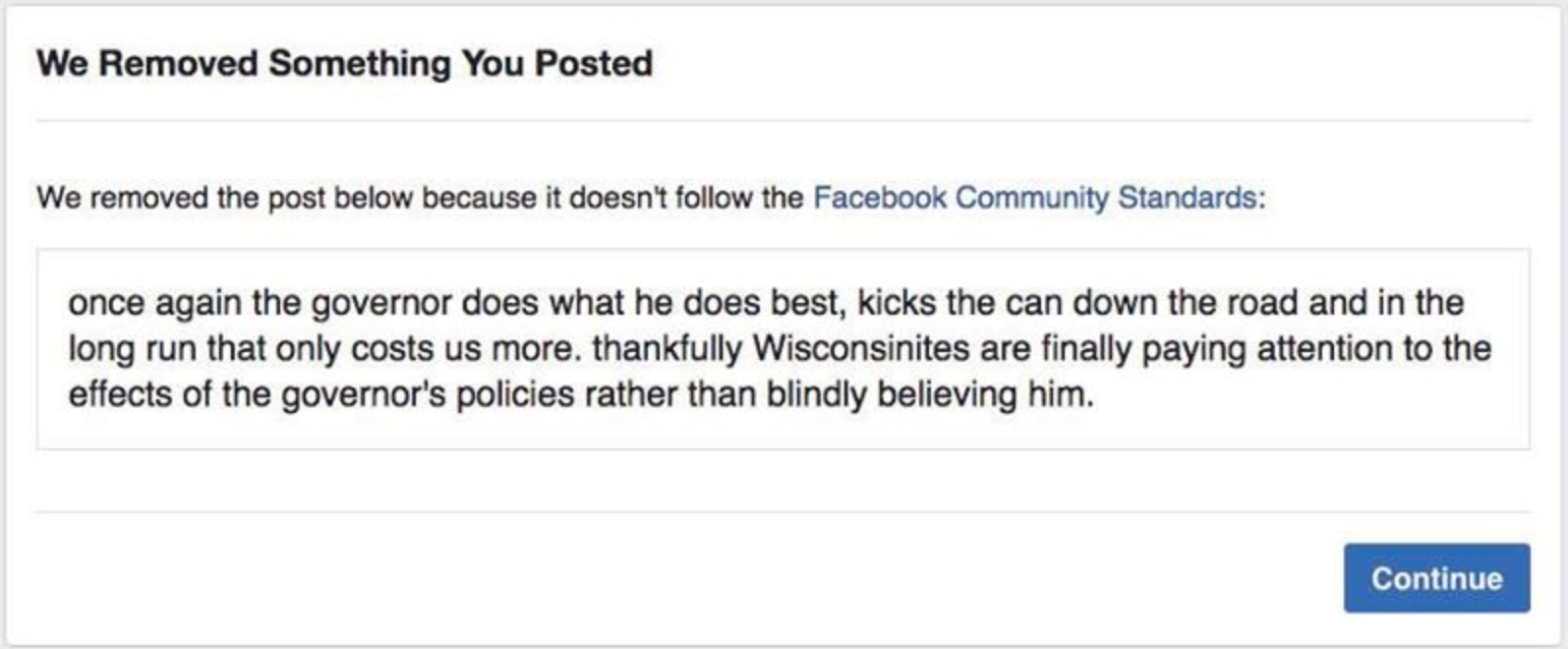

Facebook’s original decision: Took it down.

Facebook’s response: This does not violate Facebook policies. Facebook says this decision – to take down the post – was a mistake.

Facebook’s original decision: Left it up.

Facebook’s response: This does not violate Facebook policies. Attacking the members of a religion is not acceptable, but attacking the religion itself is.

Facebook’s original decision: Took it down.

Facebook’s response: This does not violate Facebook policies. Facebook says this decision – to take down the post – was a mistake. The caption attached to the photo is condemning sexual violence, and therefore the content, taken as a whole, is acceptable.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Took it down.

Facebook’s response: This violates Facebook policies. It was removed after user reports.

Facebook’s original decision: Left it up.

Facebook’s response: This violates Facebook policies. The company categorized its original decision – to leave the post up – as a mistake. It was removed after ProPublica asked for an explanation.

Facebook’s original decision: Took it down.

Facebook’s response: Facebook apologized to the author for its original decision to take the post down. It has been restored.

Facebook’s original decision: Left it up.

Facebook’s response: This image does not violate Facebook policies. Content that depicts, celebrates or jokes about non-consensual sexual touching violates rules on sexual violence and exploitation and criminal activity, but here there is not enough context to demonstrate non-consensual sexual touching.

A Facebook page that was called “I acknowledge White Privilege exists.”

Facebook’s original decision: Took it down.

Facebook’s response: Facebook could not find this page and therefore could not comment.

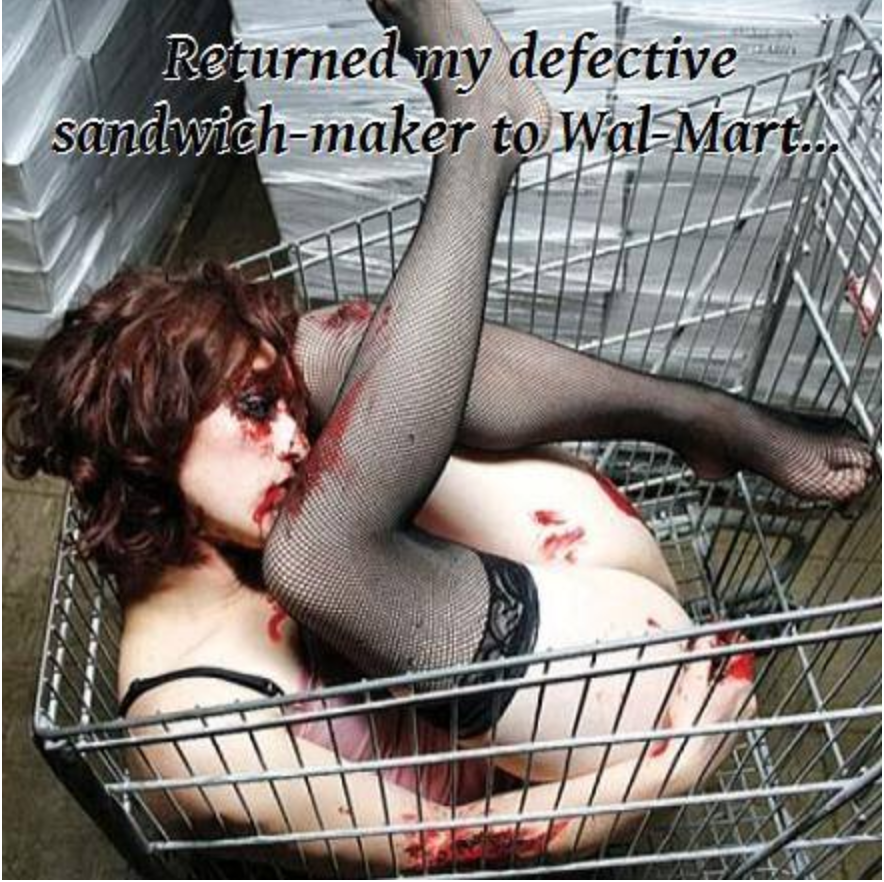

Facebook’s original decision: Took it down after multiple user reports.

Facebook’s response: This violates Facebook policies and has been removed. Facebook removes images that mock the victims of rape or non-consensual sexual touching, hate crimes or other serious physical injuries.